
Posted: Sun Jun 15, 2008 9:49 pm

by sinbad

Nice work. I think you do need to prove that you can emit triangles as well though, just in case there is an issue and it's not just your shader code.

Posted: Tue Jun 17, 2008 6:12 pm

by Noman

Note to self: when something is wrong with your assembly code, don't try to fix it at the assembly level. I had a silly mistake in the Cg code. Fixed it, and the ASM shader now emits triangles as well. GL render system done until further notice =)

The scripting interface is coming along nicely as well; I'll probably commit something working this weekend. I hope to wrap it up soon and start coding demos before the end of this month. If this keeps up, I'll probably have time to get to some (if not all) of the optional stages...

Posted: Fri Jun 20, 2008 2:07 pm

by Noman

Scripting interface finished! (I think).

The demo now only refers to the swizzle materials by name (I implemented both the ASM and GLSL versions in scripts to check).

This is what they look like:

The GLSL geometry shader material:

```
vertex_program Ogre/GPTest/Passthrough_VP_GLSL glsl
{
    source PassthroughVP.glsl
}

geometry_program Ogre/GPTest/Swizzle_GP_GLSL glsl
{
    source SwizzleGP.glsl
    input_operation_type triangle_list
    output_operation_type line_strip
    max_output_vertices 6

    default_params
    {
        param_named origColor float4 1 0 0 1
        param_named cloneColor float4 1 1 1 0.3
    }
}

material Ogre/GPTest/SwizzleGLSL
{
    technique
    {
        pass
        {
            vertex_program_ref Ogre/GPTest/Passthrough_VP_GLSL
            {
            }

            geometry_program_ref Ogre/GPTest/Swizzle_GP_GLSL
            {
            }
        }
    }
}
```

And the simpler ASM shader material:

```
geometry_program Ogre/GPTest/Swizzle_GP_ASM asm
{
    source Swizzle.gp
    syntax nvgp4
}

material Ogre/GPTest/SwizzleASM
{
    technique
    {
        pass SwizzleASMPass
        {
            geometry_program_ref Ogre/GPTest/Swizzle_GP_ASM
            {
            }
        }
    }
}
```

The demo still has the same functionality, but I changed the GLSL program to output lines so I could tell the two apart, and the amount of code is much smaller now that the materials are loaded from scripts.

Posted: Fri Jun 20, 2008 2:08 pm

by Noman

Moving on, the next thing I'm not sure about is reporting errors. Adding geometry shaders opens up many new ways to write an invalid material definition: mixing ASM with GLSL, a GLSL geometry shader without a vertex shader, etc.

What's the best way to deal with this? I'm thinking that the technique should fail to load and a message should be logged.

The problem is that all of these checks are render-system-specific: DX10 might allow things that OpenGL doesn't. I don't think the render system gets access to a pass as a whole in order to decide whether it supports it. I can't check the combination of GPU programs when they are loaded, so how will I report these errors before runtime?
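One way to picture the render-system-specific check being asked about: a hypothetical validation function that looks at the languages of all programs bound to a pass and returns an error message (to be logged) when the combination is unsupported. Only the two example rules from the post are encoded, for a GL-like render system; `PassPrograms` and `validateGLPass` are made-up names, not Ogre API.

```cpp
#include <optional>
#include <string>

// Hypothetical summary of the GPU programs bound to one pass.
// An empty string means "no program of this type is bound".
struct PassPrograms {
    std::string vertexLanguage;    // e.g. "glsl", "asm", "cg" or ""
    std::string geometryLanguage;
    std::string fragmentLanguage;
};

// Hypothetical GL rule set. Returns an error message for an unsupported
// combination, or std::nullopt if the pass looks valid. A DX10 render system
// would supply its own, different rules.
std::optional<std::string> validateGLPass(const PassPrograms& p) {
    const bool anyGlsl = p.vertexLanguage == "glsl" || p.geometryLanguage == "glsl" ||
                         p.fragmentLanguage == "glsl";
    const bool anyAsm = p.vertexLanguage == "asm" || p.geometryLanguage == "asm" ||
                        p.fragmentLanguage == "asm";
    if (anyGlsl && anyAsm)
        return "cannot mix ASM and GLSL programs in one pass";
    if (p.geometryLanguage == "glsl" && p.vertexLanguage != "glsl")
        return "a GLSL geometry program requires a GLSL vertex program";
    return std::nullopt;
}
```

Because the function only needs the whole pass, it could run when a technique is compiled rather than when individual programs load, which is where the timing problem above comes from.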

Any ideas for this?

However, this can be addressed later in the project and does not prevent me from moving on. Since scripts are now working, I can move on to coding the more impressive demos. Nvidia's IsoSurf example will be my first one.

I'm thinking about changing the order of my tasks and doing the Cg support task before the demo task, so that I can code the demos in Cg. Two reasons: I like the language more than GLSL, and the demos will be future-compatible for when Nvidia adds a DX10 profile. I will probably look into it a bit this weekend and decide.

Posted: Sun Jun 22, 2008 5:46 pm

by Noman

Right.

I was going over the Cg plugin code to estimate how much work it would take to add geometry shader support.

I noticed that it has no profile-specific code: it just takes the supported profiles from the render system (which is also in charge of declaring support for the Cg profile names) and uses them.

So, about 10 lines of code later (declaring the gp4gp and gpu_gp profiles in the GL render system)...
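The mechanism described above (the render system declares profiles, the Cg side just picks one) can be sketched like this, assuming a `profiles` line is treated as a preference list where the first profile the active render system supports wins. `SupportedProfiles` and `selectProfile` are illustrative names, not the actual Ogre or Cg API.

```cpp
#include <optional>
#include <set>
#include <sstream>
#include <string>

// Hypothetical: the set of profile names the active render system declared
// (a GL render system with geometry support would add "gp4gp" and "gpu_gp").
using SupportedProfiles = std::set<std::string>;

// Returns the first profile from a space-separated list (as written in a
// material script "profiles" line) that the render system supports.
std::optional<std::string> selectProfile(const std::string& profileList,
                                         const SupportedProfiles& supported) {
    std::istringstream in(profileList);
    std::string profile;
    while (in >> profile) {
        if (supported.count(profile) != 0)
            return profile;
    }
    return std::nullopt;  // no usable profile: the program can't be loaded
}
```

With this split, adding geometry program support only touches the render system's declaration list, which matches the "about 10 lines" observation.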

Let there be Cg geometry program support in Ogre!

```
geometry_program Ogre/GPTest/Swizzle_GP_CG cg
{
    source SwizzleGP.cg
    entry_point gs_swizzle
    profiles gp4gp gpu_gp
}

material Ogre/GPTest/SwizzleCG
{
    technique
    {
        pass SwizzleCGPass
        {
            geometry_program_ref Ogre/GPTest/Swizzle_GP_CG
            {
            }
        }
    }
}
```

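and the Cg source (SwizzleGP.cg):

```
// Swizzle geometry program for triangles: emits each input triangle
// unchanged, then a clone with its x and y coordinates swapped
TRIANGLE void gs_swizzle(AttribArray<float4> position : POSITION)
{
    // Original triangle, light red
    for (int i = 0; i < position.length; i++) {
        emitVertex(position[i] : POSITION, float4(1, 0.5, 0.5, 1) : COLOR0);
    }
    restartStrip();

    // Swizzled clone (x and y swapped), light blue
    for (int i = 0; i < position.length; i++) {
        float4 newPosition = position[i].yxzw;
        emitVertex(newPosition : POSITION, float4(0.5, 0.5, 1, 1) : COLOR0);
    }
    restartStrip();
}
```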

I slightly changed the hard-coded colors to further distinguish between the different materials. You can now select between the ASM, GLSL and Cg versions of the swizzle geometry shader (by commenting out code; this demo won't make it into Ogre head, so it's playpen-quality), and they all work.

Rejoice!

I'm pretty glad that I decided to wrap up ASM program support in the GL render system before moving on...

Posted: Mon Jun 23, 2008 12:38 pm

by tuan kuranes

So we can use all the shiny capabilities of the latest profiles with the latest Cg?

Can you run the YCoCg-DXT compression Cg demo from the NVIDIA SDK 10 (GL), for instance, or do other parts of Ogre need to be updated to support integer render targets, integer texture addressing or other things?

Posted: Mon Jun 23, 2008 5:42 pm

by Noman

As far as the Cg compiler is concerned, the answer would be yes. The Cg compiler receives Cg code as input and emits (in our case) ASM code as output.

The question is: can Ogre support the program that the Cg compiler created?

The scope of this SoC project is just adding support for geometry shaders in Ogre. I am not looking into any other DX10-level features. If Ogre can create the required render target, I see no reason that would prevent you from running the compiled Cg programs.

Posted: Tue Jun 24, 2008 6:10 pm

by Noman

While I'm still deciding which demos to port (currently thinking about IsoSurf and ParticleGS), there's another change I'm considering.

So far, out of the 4 geometry program options I've encountered (Cg, GL ASM, GLSL, HLSL), only one of them (GLSL) requires you to manually specify the input primitive type, output primitive type and max output vertices.

It seems that contrary to what I thought when I implemented GLSL, this is the exception and not the normal case.
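The reason GLSL is the odd one out: under EXT_geometry_shader4, the input type, output type and maximum vertex count of a GLSL geometry shader are set on the program object with glProgramParameteriEXT (GL_GEOMETRY_INPUT_TYPE_EXT, GL_GEOMETRY_OUTPUT_TYPE_EXT, GL_GEOMETRY_VERTICES_OUT_EXT) before linking, instead of being declared in the shader source. A sketch of only the string-to-enum step, i.e. how script values like `triangle_list` and `line_strip` could be translated; the helper names are assumptions, and the GL constants are copied locally so the snippet compiles without GL headers.

```cpp
#include <map>
#include <optional>
#include <string>

// Values copied from the OpenGL headers so this compiles standalone.
constexpr int kGL_POINTS = 0x0000;
constexpr int kGL_LINES = 0x0001;
constexpr int kGL_LINE_STRIP = 0x0003;
constexpr int kGL_TRIANGLES = 0x0004;
constexpr int kGL_TRIANGLE_STRIP = 0x0005;

// Hypothetical mapping for input_operation_type: the geometry shader sees
// whole primitives, so lists, strips and fans map to the same basic kind.
std::optional<int> toGeometryInputType(const std::string& op) {
    static const std::map<std::string, int> table = {
        {"point_list", kGL_POINTS},
        {"line_list", kGL_LINES},         {"line_strip", kGL_LINES},
        {"triangle_list", kGL_TRIANGLES}, {"triangle_strip", kGL_TRIANGLES},
        {"triangle_fan", kGL_TRIANGLES}};
    auto it = table.find(op);
    if (it == table.end()) return std::nullopt;
    return it->second;
}

// Hypothetical mapping for output_operation_type: EXT_geometry_shader4 only
// allows points, line strips and triangle strips as output.
std::optional<int> toGeometryOutputType(const std::string& op) {
    static const std::map<std::string, int> table = {
        {"point_list", kGL_POINTS},
        {"line_strip", kGL_LINE_STRIP},
        {"triangle_strip", kGL_TRIANGLE_STRIP}};
    auto it = table.find(op);
    if (it == table.end()) return std::nullopt;
    return it->second;
}

// The results, plus max_output_vertices, would then be handed to
// glProgramParameteriEXT on the program object before glLinkProgram.
```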

I'm thinking about moving the three properties I've exposed (InputOperationType, OutputOperationType and MaxOutputVertices) to OgreGLSLProgram rather than the generic OgreGpuProgram.

The information will usually be passed by the material scripts (via StringInterface), and I don't think there's any problem with adding more options to the dictionary when subclassing.

Ogre script people (praetor, sinbad) - is there any technical problem with this?

This will clean up OgreGpuProgram. Also, these getters would return garbage information when called on a GpuProgram that declares this information in the shader code itself. I'll probably do this move by the end of the week.

It's demo time after that.

Posted: Thu Jun 26, 2008 10:29 pm

by sinbad

Yeah, that's fine - there are plenty of cases where only specific GpuProgram subclasses have particular parameters. The script compiler doesn't get involved in parsing them really, except for splitting the text up into symbols - it will pass anything it doesn't understand to the GpuProgram to see if it will process it via the StringInterface.
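A toy model of what sinbad describes: each class registers named parameters, a subclass simply adds its own entries, and anything the script compiler doesn't understand is forwarded to `setParameter`, which reports whether some class handled it. This only mimics the spirit of Ogre's StringInterface/ParamDictionary; `ParamObject`, `GpuProgramLike` and `GlslProgramLike` are made-up, simplified classes.

```cpp
#include <functional>
#include <map>
#include <string>
#include <utility>

// Simplified, hypothetical stand-in for a string-driven parameter interface.
class ParamObject {
public:
    ParamObject() = default;
    // Setters capture `this`, so copies would point at the original object.
    ParamObject(const ParamObject&) = delete;
    ParamObject& operator=(const ParamObject&) = delete;
    virtual ~ParamObject() = default;

    // Returns false if no class in the hierarchy registered this name;
    // a script compiler could then report an unknown parameter.
    bool setParameter(const std::string& name, const std::string& value) {
        auto it = setters_.find(name);
        if (it == setters_.end()) return false;
        it->second(value);
        return true;
    }

protected:
    using Setter = std::function<void(const std::string&)>;
    void addParameter(const std::string& name, Setter setter) {
        setters_[name] = std::move(setter);
    }

private:
    std::map<std::string, Setter> setters_;
};

// Generic program: only parameters every program type understands.
class GpuProgramLike : public ParamObject {
public:
    GpuProgramLike() {
        addParameter("source", [this](const std::string& v) { source = v; });
    }
    std::string source;
};

// GLSL-specific subclass adds its own entries; other program types never see them.
class GlslProgramLike : public GpuProgramLike {
public:
    GlslProgramLike() {
        addParameter("input_operation_type",
                     [this](const std::string& v) { inputOperationType = v; });
        addParameter("output_operation_type",
                     [this](const std::string& v) { outputOperationType = v; });
        addParameter("max_output_vertices",
                     [this](const std::string& v) { maxOutputVertices = std::stoi(v); });
    }
    std::string inputOperationType;
    std::string outputOperationType;
    int maxOutputVertices = 0;
};
```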

Posted: Fri Jun 27, 2008 4:16 pm

by Noman

OK. I refactored them into OgreGLSLProgram. Much cleaner now (no need to worry about giving the user garbage information and such).

Now on to the demos: I decided that the first demo I'll implement will be the Cg IsoSurf example (http://developer.download.nvidia.com/SD ... mples.html).

Big question - Add render-to-buffer support to the GSoC project?

The next issue I'm currently thinking about is a rather big one. I wanted to go for the ParticleGS example from the DX10 SDK, but it currently can't be implemented in Ogre, because Ogre has no render-to-buffer support (Stream-Out in DX, Transform Feedback in GL).

I'm seriously considering adding support for this feature as well. I'm pretty much ahead of schedule and have already finished up all the features I declared "essential" to the GSoC besides the high quality demos.

Geometry shaders and render-to-buffer (does this feature have a generic name?) pretty much go hand in hand, since render-to-buffer lets you save the geometry dynamically generated by the geometry shader for use in the next frame.

I don't know how much geometry programs will help people without render-to-buffer support.

Let's set things straight: the next step is to implement the IsoSurf demo. By the time I start adding support for this (if I do), the essential deliverables for this GSoC will already be finished.

Of course, if I do decide to go for this feature, a whole discussion on how such a feature can be generically represented in Ogre needs to happen.

What do you guys think?

Posted: Fri Jun 27, 2008 4:23 pm

by tuan kuranes

> Geometry shaders and render-to-buffer (does this feature have a generic name?) pretty much go hand in hand

I'd say the same.

Posted: Sat Jun 28, 2008 12:35 pm

by Assaf Raman

Well, let's add render-to-buffer support. If you need my help with it, I will help.

Posted: Sat Jun 28, 2008 7:11 pm

by Noman

Ok.

I'm not 100% sure I'm going to add it to this project; I think it depends on how the design phase goes.

Because it takes time and I would like the Ogre team and community to be involved (some API definition is going in here as well), I will start the discussion in parallel with the implementation of the IsoSurf sample (which has already started).

Render-to-buffer API

The first thing I considered was to have a new subclass of Ogre::RenderTarget called Ogre::RenderBuffer. I quickly discarded this idea because the API has many irrelevant requirements and it is not really a render target (doesn't contain viewports and such).

In fact, there is pretty much a 1-to-1 relationship between a renderable (vertex+index buffer) and a render buffer target.

In a way, I can see this feature as somewhat parallel to the animation framework: it updates the vertex & index data of an object every frame before the rendering takes place, and is often sequential.

There are two main conceptual modes of operation that we need to support:

1) Reset-every-frame mode. SrcBuffer + Shader = DstBuffer. SrcBuffer is used as the source next frame.

2) State-machine-ish mode. SrcBuffer + Shader = DstBuffer. DstBuffer is used as the source next frame.
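A CPU-side toy model of the two modes, where a "buffer" is a vector of floats and the "shader" is any function from buffer to buffer. In mode 1 the source is never modified; in mode 2 the output is fed back in, which on the GPU usually means swapping ("ping-ponging") two buffers. All names here are illustrative, not a proposed Ogre API.

```cpp
#include <functional>
#include <utility>
#include <vector>

using Buffer = std::vector<float>;
using BufferShader = std::function<Buffer(const Buffer&)>;

// Mode 1, reset every frame: each frame starts again from the original source.
Buffer runResetMode(const Buffer& src, const BufferShader& shader, int frames) {
    Buffer dst;
    for (int f = 0; f < frames; ++f)
        dst = shader(src);  // src is never modified
    return dst;
}

// Mode 2, state-machine-ish: last frame's output is this frame's input.
Buffer runStateMode(const Buffer& src, const BufferShader& shader, int frames) {
    Buffer current = src;
    Buffer next;
    for (int f = 0; f < frames; ++f) {
        next = shader(current);
        std::swap(current, next);  // output becomes next frame's source
    }
    return current;
}
```

With a shader that adds 1 to every element, three frames give 1 in mode 1 but 3 in mode 2, which is exactly the difference between "reset" and "accumulate".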

The biggest question I'm asking myself is how generic should this be.

Non-generic solution (but less obtrusive)

Theoretically, we can have a class that is a proxy Ogre::Renderable - it receives an Ogre::Renderable as input, and also implements Ogre::Renderable itself. When getRenderOperation is called, it will return the transformed buffers rather than the originals. It will have update() and reset() methods, which will update the destination buffers and return them to their original states, respectively.

These methods will be triggered either manually (by a frame listener in frameStarted, for example) or automatically (which means that a singleton that keeps track of all the render buffers has to exist and be called every frame). I believe that the animation framework in Ogre operates in a similar manner.
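For the automatic option, the "singleton that keeps track of all the render buffers" could look roughly like this. `RenderBufferRegistry` and the `Updatable` interface are hypothetical; where the once-per-frame `updateAll()` call comes from (e.g. a frame listener) is left out.

```cpp
#include <algorithm>
#include <vector>

// Hypothetical: anything that refreshes its generated geometry once per frame.
class Updatable {
public:
    virtual ~Updatable() = default;
    virtual void update() = 0;
};

// Hypothetical singleton holding non-owning pointers to all render buffers.
class RenderBufferRegistry {
public:
    static RenderBufferRegistry& instance() {
        static RenderBufferRegistry registry;  // created on first use
        return registry;
    }
    void add(Updatable* u) { items_.push_back(u); }
    void remove(Updatable* u) {
        items_.erase(std::remove(items_.begin(), items_.end(), u), items_.end());
    }
    // To be called once per frame, before the scene is rendered.
    void updateAll() {
        for (Updatable* u : items_) u->update();
    }

private:
    RenderBufferRegistry() = default;
    std::vector<Updatable*> items_;
};
```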

Of course, a boolean resetEveryFrame flag can be used to easily distinguish between the conceptual modes of operation I described earlier.
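A minimal sketch of the proxy idea under heavy simplification: a hypothetical two-line `Renderable` interface stands in for Ogre::Renderable (the real one has many more members and fills in a RenderOperation), and the shader is modelled as a CPU function. `update()`, `reset()` and the `resetEveryFrame` flag follow the names in the post; everything else is illustrative.

```cpp
#include <functional>
#include <utility>
#include <vector>

using Geometry = std::vector<float>;  // stand-in for vertex + index data

// Hypothetical, heavily reduced renderable interface.
class Renderable {
public:
    virtual ~Renderable() = default;
    virtual const Geometry& getGeometry() const = 0;
};

class StaticRenderable : public Renderable {
public:
    explicit StaticRenderable(Geometry g) : geometry_(std::move(g)) {}
    const Geometry& getGeometry() const override { return geometry_; }
private:
    Geometry geometry_;
};

// Wraps another renderable and hands out the transformed geometry instead.
// The wrapped renderable must outlive the proxy.
class RenderToBufferProxy : public Renderable {
public:
    RenderToBufferProxy(const Renderable& source,
                        std::function<Geometry(const Geometry&)> shader,
                        bool resetEveryFrame)
        : source_(source), shader_(std::move(shader)),
          resetEveryFrame_(resetEveryFrame), current_(source.getGeometry()) {}

    // Run the "shader" once: from the original data (mode 1) or from the
    // previous result (mode 2).
    void update() {
        current_ = shader_(resetEveryFrame_ ? source_.getGeometry() : current_);
    }
    // Return to the original geometry.
    void reset() { current_ = source_.getGeometry(); }

    const Geometry& getGeometry() const override { return current_; }

private:
    const Renderable& source_;
    std::function<Geometry(const Geometry&)> shader_;
    bool resetEveryFrame_;
    Geometry current_;
};
```

The scene graph would hold the proxy instead of the original renderable, which is the "who places the proxy" problem raised next.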

The problem with this approach is that you need to write quite a bit of code in order to use it. Who places the proxy renderable in the scene graph instead of the normal one? You will need a MovableObject subclass for each object type that uses this feature (for example, a ProceduralBillboardSet would be required for the ParticleGS sample).

Generic solution (but more obtrusive)

This solution is similar to the first solution, but with a big difference.

Instead of the proxy Ogre::Renderable, one of the generic ogre classes (I'm thinking about Ogre::MovableObject, but Ogre::Renderable might also be a possibility) will handle the RenderBuffer functionality. Then, for every MovableObject (entity, billboard set, etc) you will be able to set a RenderBufferGeometry material (via a setRenderBufferGeometry call, along with setResetProceduralGeometryEveryFrame, resetRenderBufferGeometry, setRenderBufferGeometryAutoUpdates etc), and it will take care of the rest.

This will allow us to easily integrate geometry-generating materials without adding MovableObject-specific code (think of the wonders it can do for Ogre::ManualObject: lots of the math that generates the geometry can be moved into shaders).

However, it is very obtrusive (Ogre::MovableObject is a core class in Ogre) and raises some questions with some MovableObjects (i.e., what happens to the render-to-buffer geometry when the MovableObject decides to internally regenerate its vertex & index buffers?)

I believe that the non-obtrusive solution is the better one, and MovableObject types that can use this feature (BillboardSet and ManualObject are the first two that come to mind for me) will implement related functionality.

I was mainly thinking out loud in this post. I'd love to get as many comments as possible. I'll probably read this myself again tomorrow, and continue to design the solution.

Comments? =)

Posted: Mon Jun 30, 2008 2:19 pm

by tuan kuranes

> In fact, there is pretty much a 1-to-1 relationship between a renderable (vertex+index buffer) and a render buffer target.

DX10 sort of promoted a concept of 'casting' buffer types (called 'texture views'); GL does that with "Texture Buffer Objects", I believe.

The idea is to spare the user from having to copy results from one buffer into another, 'pre-cast' one.

So conceptually, Ogre should be able to reflect that somehow.

Perhaps the current RenderTarget should be renamed more explicitly to "FrameRenderTarget", and the new one would be "RenderBufferView", both referencing/using/encapsulating a RenderBuffer, which would reflect that 'typeless buffer' idea?

> Generic solution (but more obtrusive)

The generic solution seems the way to go to me.

Could 'geometry_buffers on/off' or 'initial buffer on/off' be a parameter of the material pass, so that the user can specify things on a per-pass basis?

The best would be to extend, copy or otherwise achieve something like the compositor scripting flexibility (they could be renamed "Frame Compositor" and "Geometry Compositor").

Posted: Mon Jun 30, 2008 4:12 pm

by Noman

I think you misunderstood part of my post. Buffer 'casting' is irrelevant here. The render-to-buffer technique outputs vertex and index buffers. No need to cast between different types of buffers.

I don't think we should go anywhere near these features in this SoC (Ogre in general can, just not here).

As for the two solutions: I'm not saying that I like the less generic solution because it's an easier way out; I just think that the generic one has many pitfalls.

I understand why you compare this feature to the compositor framework, but I disagree. While API-wise this feature is something that you can add to any vertex+index buffer combination, logically speaking there is a tighter coupling between the shader and the vertex+index data that it will run on.

This is what distinguishes the RenderToBuffer feature from the compositor framework IMO. In most cases, compositors can be written regardless of the scenes they are compositing. Most of Ogre's sample compositors can be applied to everyday applications and work as expected out of the box. If I apply the vertex+geometry shader from the ParticleGS sample to an entity, I'll get garbage! I believe that this is the normal case in the render-to-buffer world.

This is the main reason that I think that RenderToBuffer shouldn't be part of Ogre::Renderable or Ogre::MovableObject, but a facility that the subclassers of those two classes can use if needed. Perhaps classes like Ogre::BillboardSet will have an out-of-the-box option to use a RenderToBuffer material (or perhaps a new, simpler object type that only contains a vertex and geometry shader), but should Ogre::Entity have this option? What will happen when software blending updates the vertex buffer?

I'm not making decisions yet, just debating...

Perhaps a thread should be opened in developer talk?

Posted: Mon Jun 30, 2008 4:31 pm

by tuan kuranes

> The render-to-buffer technique outputs vertex and index buffers. No need to cast between different types of buffers.

The point of my remarks was more about understanding why 'texture views', 'typeless buffers' and so on exist, and therefore making sure to keep that in mind when thinking about the design.

> compositors can be written regardless of the scenes

Just as a ShadowGS or a Catmull-Clark mesh subdivision GS works for any mesh...

> If I apply the vertex+geometry shader from the particleGS sample to an entity, I'll get garbage!

Same if you use a compositor that does deferred rendering and expects a "normal buffer" or a "depth buffer" as input...

> I believe that this is the normal case in the render-to-buffer world.

Geometry shaders are at an early stage; we lack sample applications...

> This is the main reason that I think that RenderToBuffer shouldn't be part of Ogre::Renderable or Ogre::MovableObject, but a facility that the subclassers of those two classes can use if needed.

I would even go deeper and add that to the VertexData class; then, using a material pass option (as I suggested above), the renderer would choose at render time between VertexData's internal vertex buffers (initial or generated).
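Tuan's suggestion can be pictured like this: the vertex data keeps both its initial and its generated contents, and a per-pass option decides which one is bound at render time. `VertexDataSketch`, `PassOptions` and `useGeneratedGeometry` are invented for illustration and are not Ogre's VertexData or Pass API.

```cpp
#include <optional>
#include <vector>

using VertexBuffer = std::vector<float>;

// Hypothetical per-pass flag, e.g. set from a material script option.
struct PassOptions {
    bool useGeneratedGeometry = false;
};

// Hypothetical vertex data that remembers both versions of its contents.
struct VertexDataSketch {
    VertexBuffer initial;
    std::optional<VertexBuffer> generated;  // empty until render-to-buffer ran

    // Chosen per pass at render time; falls back to the initial data if
    // nothing has been generated yet.
    const VertexBuffer& bufferFor(const PassOptions& pass) const {
        if (pass.useGeneratedGeometry && generated) return *generated;
        return initial;
    }
};
```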

Posted: Mon Jun 30, 2008 5:31 pm

by Noman

I created a thread in Developer Talk debating this feature, in order to keep this thread on track with the main focus of the SoC project. Please discuss the feature in that thread only.

If I do decide to add it as part of the SoC, the development talk (and not the design discussion) will continue here.

Posted: Tue Jul 01, 2008 4:05 pm

by sparkprime

I haven't read the whole of this very interesting thread, but would it be possible to extend/replace the Static/InstancedGeometry mechanisms so that they allow addition/removal of entities from the batch?

Posted: Wed Jul 02, 2008 7:09 am

by Assaf Raman

@sparkprime: This is not related to "addition/removal of entities from the batch".

Posted: Wed Jul 09, 2008 6:29 pm

by Noman

Sorry for the lack of replies. I was abroad for a week and didn't work on the SoC project.

Midterm evaluation is coming up. I'm ahead of the proposed schedule but still need to get done with it. I hope to get the IsoSurf demo running this weekend so I can move on to the next stage which will be either

- Another demo?

- Render-To-Buffer support?

- DirectX10 render system integration?

I'm currently leaning towards the second option, because it will allow me to implement the ParticleGS sample, which requires it. That also means we finish the SoC with a demonstration that shows render-to-buffer in use as well.

I pretty much abandoned the shadow technique idea (which was optional anyway) because I feel the Render-To-Buffer is much more important.

So, unless things change (this is where you - the community - come into play) the roadmap will be

- Finish IsoSurf

- Render-To-Buffer support

- ParticleGS demo (impressive gs+rtb demo)

- DirectX10 rendersystem (if time permits)

I think this is enough work until the end of the SoC, and if I finish all four (which I think I will) it will be a huge success.

Posted: Thu Jul 10, 2008 7:13 am

by Assaf Raman

Sounds good, the DX10 should be last - I will do the work if you won't have the time.

Posted: Tue Jul 15, 2008 9:44 pm

by Noman

For the first time in the project, something is going harder than expected.

The IsoSurf sample is harder to port than I thought. There are lots of unexpected issues, and it's very hard to debug since the symptom is usually empty visual output.

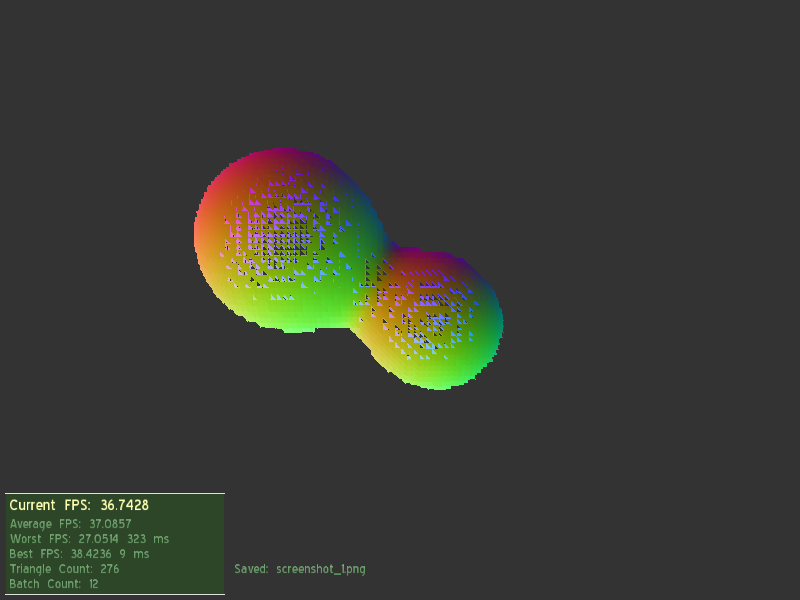

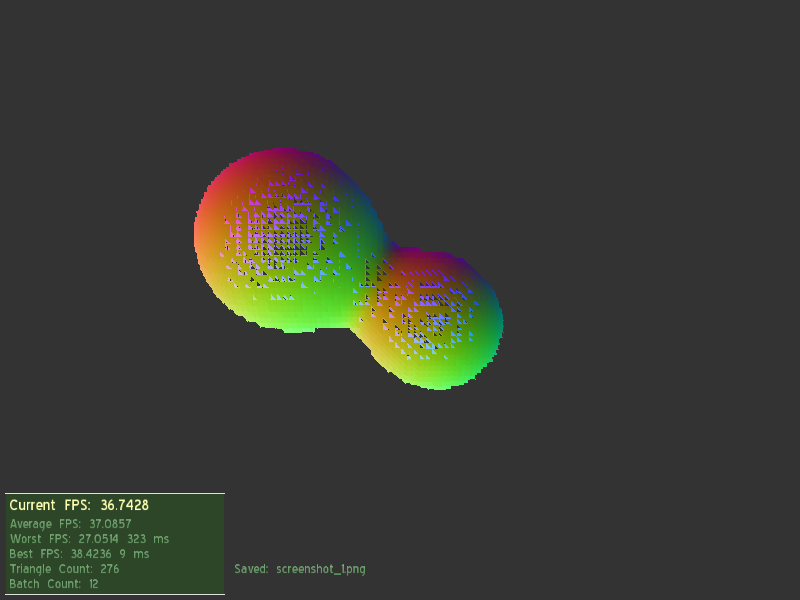

Progress is slowly being made... This is what I have so far :

The colours are just for debugging, so never mind them. The real problem is that the balls aren't smooth yet.

The original Cg shader uses texRECT to address the texture. Does anyone know if I can use this call to sample normal textures (the demo uses a special kind)? If not, I might rewrite it as a normal tex2D call...
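For reference if the lookup is rewritten: texRECT samples a rectangle texture with unnormalised texel coordinates (0..width, 0..height), whereas tex2D expects normalised coordinates (0..1). Switching to tex2D therefore means dividing the lookup coordinate by the texture size, which would have to be passed to the shader as an extra parameter (an assumption about how the port would be done). The arithmetic, shown in C++ to keep all examples in one language; the function name is illustrative.

```cpp
#include <array>

using Float2 = std::array<float, 2>;

// Converts a texRECT-style coordinate (in texels) into the normalised
// coordinate tex2D expects. texSize holds the texture's width and height.
// In Cg this would be roughly: tex2D(sampler, texelCoord / texSize)
Float2 rectToNormalized(const Float2& texelCoord, const Float2& texSize) {
    return {texelCoord[0] / texSize[0], texelCoord[1] / texSize[1]};
}
```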

Posted: Tue Jul 15, 2008 10:42 pm

by PolyVox

I don't have an answer to your question, but I just wanted to say congratulations on your work so far. This is a very cool project and I'm following it with interest!

Posted: Wed Jul 16, 2008 10:45 pm

by Noman

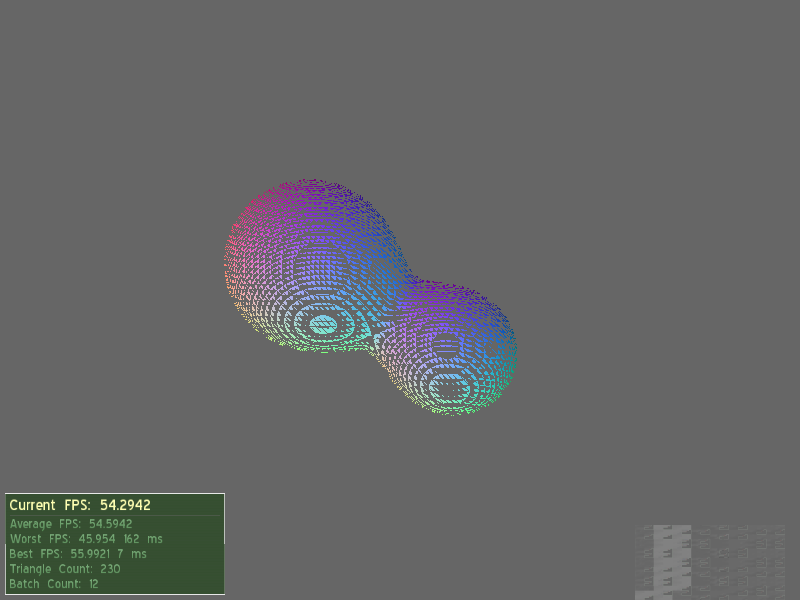

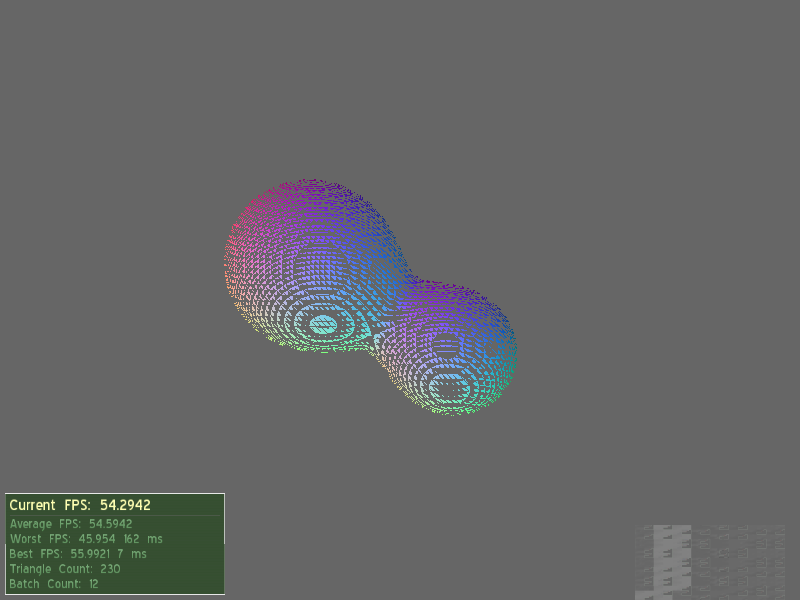

More baby steps toward the solution

Tessellation now generates a smooth shape, but some triangles are missing. Getting closer and closer.

Posted: Fri Jul 18, 2008 2:50 pm

by Noman

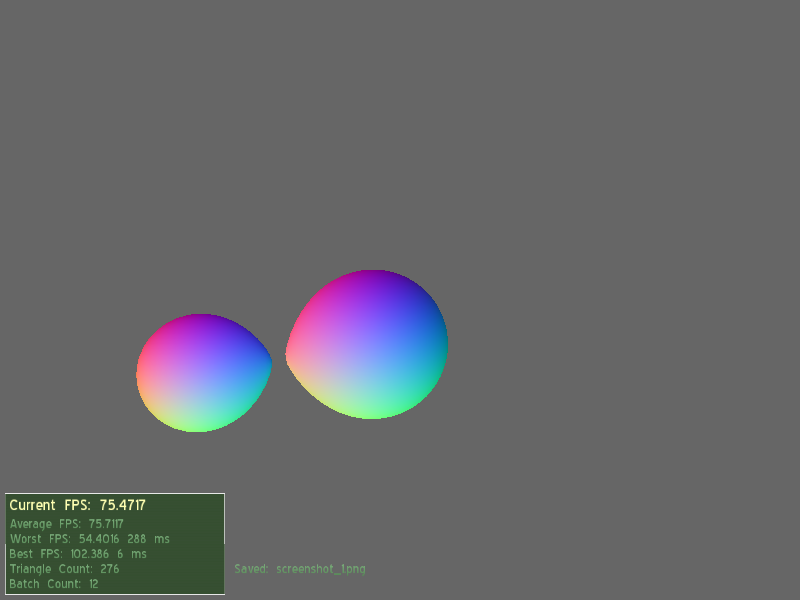

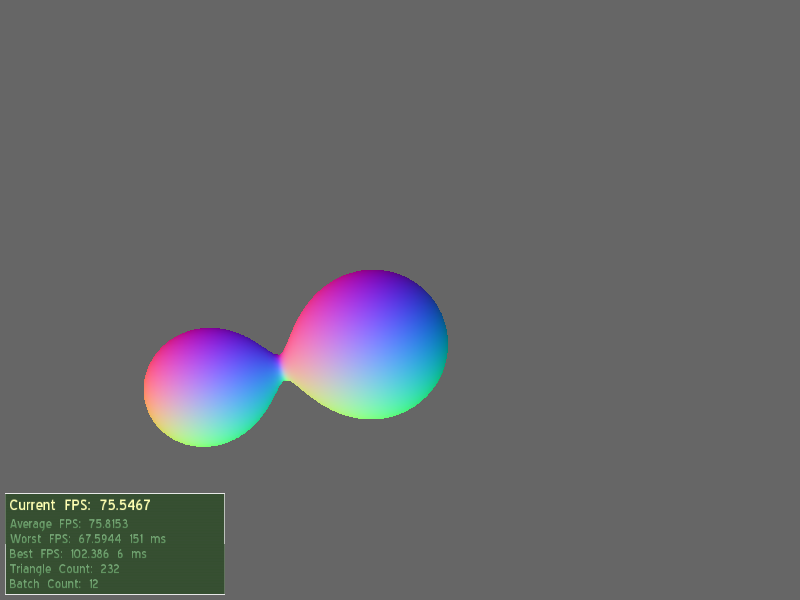

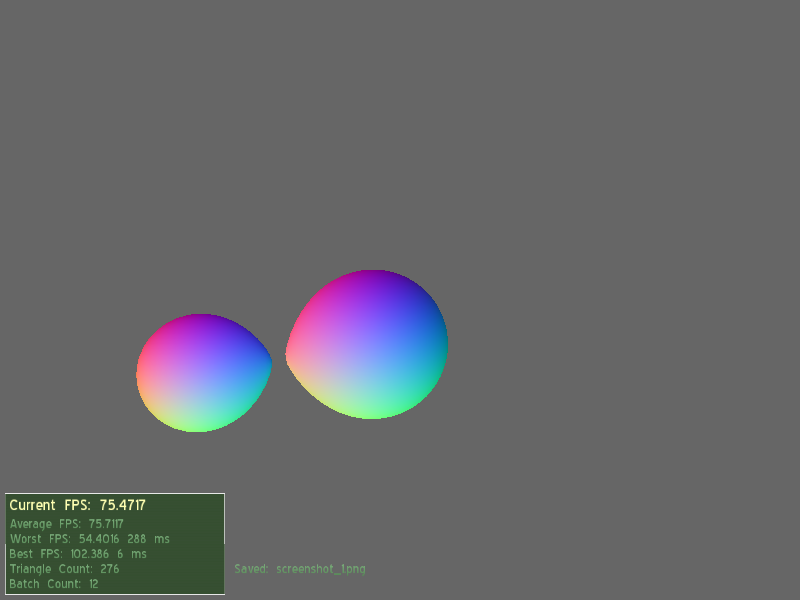

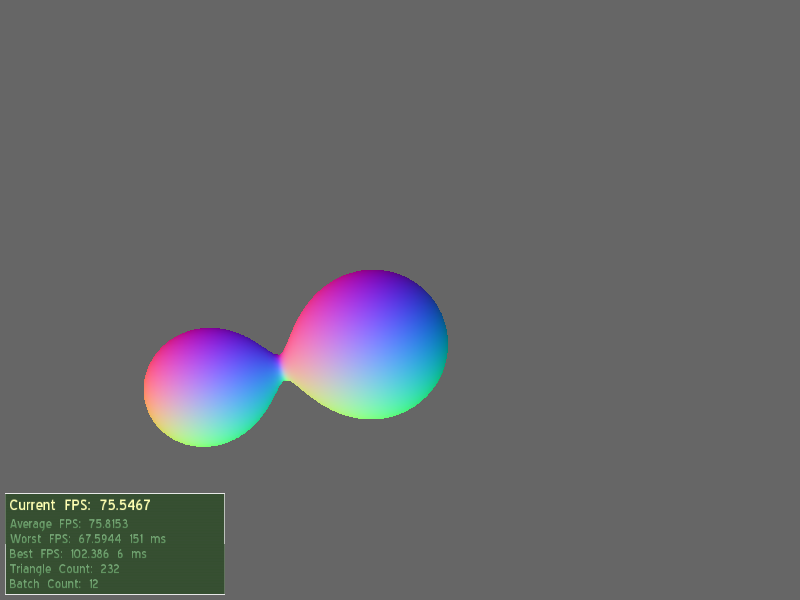

Almost done...

Very little work left to be done: I just have to figure out why the normals aren't being set up correctly, and do some final code cleanup.

Important Question

One downside is that I had to hack the GL rendersystem to get this to work.

The primitive that gets rendered has to be GL_LINES_ADJACENCY_EXT. The question is: how do we give Ogre the ability to send this primitive type?

Two options :

1) Add the required new possibilities to the RenderOperation enum. This makes sense because these types are becoming generic. See http://msdn.microsoft.com/en-us/library ... 85%29.aspx .

2) Less obtrusive solution. Since these new render operations have no use without geometry shaders (do they?), perhaps a 'Needs adjacency' flag can be added to the geometry shader. Then, when the render system decides on the native primitive type to use, it checks whether the pass has a geometry shader. If it does and the geometry shader has the flag turned on, it can pass the adjacency variant of the primitive type to the draw call.
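A sketch of how option 2 might look inside a GL render system: pick the normal primitive for the operation type, and upgrade it to the matching adjacency primitive only when the pass's geometry program asks for it. `OperationType`, `PassInfo`, `needsAdjacency` and `glPrimitiveFor` are hypothetical; the constant values are the core GL and EXT_geometry_shader4 ones, copied locally so the snippet compiles without GL headers. Note that the vertex data itself must already contain the adjacency vertices (4 per line, 6 per triangle).

```cpp
#include <optional>

// Core GL primitive values.
constexpr unsigned kGL_POINTS = 0x0000;
constexpr unsigned kGL_LINES = 0x0001;
constexpr unsigned kGL_LINE_STRIP = 0x0003;
constexpr unsigned kGL_TRIANGLES = 0x0004;
constexpr unsigned kGL_TRIANGLE_STRIP = 0x0005;
constexpr unsigned kGL_TRIANGLE_FAN = 0x0006;
// Adjacency primitives from EXT_geometry_shader4.
constexpr unsigned kGL_LINES_ADJACENCY_EXT = 0x000A;
constexpr unsigned kGL_LINE_STRIP_ADJACENCY_EXT = 0x000B;
constexpr unsigned kGL_TRIANGLES_ADJACENCY_EXT = 0x000C;
constexpr unsigned kGL_TRIANGLE_STRIP_ADJACENCY_EXT = 0x000D;

enum class OperationType { PointList, LineList, LineStrip, TriangleList, TriangleStrip, TriangleFan };

// Hypothetical: what the render system knows about the pass being drawn.
struct PassInfo {
    bool hasGeometryProgram = false;
    bool needsAdjacency = false;  // the proposed geometry program flag
};

// Returns the GL primitive for the draw call, or std::nullopt if adjacency is
// requested for a type that has no adjacency variant (points, fans).
std::optional<unsigned> glPrimitiveFor(OperationType op, const PassInfo& pass) {
    const bool adjacency = pass.hasGeometryProgram && pass.needsAdjacency;
    switch (op) {
    case OperationType::LineList:
        return adjacency ? kGL_LINES_ADJACENCY_EXT : kGL_LINES;
    case OperationType::LineStrip:
        return adjacency ? kGL_LINE_STRIP_ADJACENCY_EXT : kGL_LINE_STRIP;
    case OperationType::TriangleList:
        return adjacency ? kGL_TRIANGLES_ADJACENCY_EXT : kGL_TRIANGLES;
    case OperationType::TriangleStrip:
        return adjacency ? kGL_TRIANGLE_STRIP_ADJACENCY_EXT : kGL_TRIANGLE_STRIP;
    case OperationType::PointList:
        if (adjacency) return std::nullopt;
        return kGL_POINTS;
    case OperationType::TriangleFan:
        if (adjacency) return std::nullopt;
        return kGL_TRIANGLE_FAN;
    }
    return std::nullopt;
}
```

The appeal of this option is that RenderOperation stays untouched; the cost is that the meaning of an index buffer now depends on which material is applied to it.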

IMO, if there isn't a reason to use adjacency information unless using geometry shaders, there is no need for the RenderOperation type to be modified.

What do you guys think?