As part of my GSoC Geometry Shader project, I decided to add another important feature: Render To Vertex Buffer support.

The feature lets you save the output of the vertex and geometry stages of the pipeline to a vertex buffer and use it later. You can also feed that output back in as the input of the next pass, which gives us iterative geometry processing entirely on the GPU.
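To make the feedback loop concrete, here is a minimal CPU-side sketch of the ping-pong pattern that render to vertex buffer enables: two buffers, where each pass reads from one and writes into the other, and then the roles swap. This is not Ogre's API or the OpenGL render system code. `Vertex`, `geometryPass` and `PingPongBuffers` are hypothetical names, and the per-vertex work (advancing a position by one time step) is only a placeholder for whatever the vertex and geometry shaders would do on the GPU.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <utility>
#include <vector>

// Hypothetical vertex layout for this sketch: a position and a velocity.
struct Vertex {
    float pos[3];
    float vel[3];
};

// Stand-in for the vertex+geometry stages: reads one vertex and appends
// its output to the stream. Here it just moves the position by vel * dt.
void geometryPass(const Vertex& in, float dt, std::vector<Vertex>& out) {
    assert(dt >= 0.0f);  // a negative time step makes no sense here
    Vertex v = in;
    for (int i = 0; i < 3; ++i) v.pos[i] += v.vel[i] * dt;
    out.push_back(v);
}

// Two buffers used in ping-pong fashion: each pass reads from the
// current buffer, writes into the other one, then swaps their roles.
class PingPongBuffers {
public:
    explicit PingPongBuffers(std::vector<Vertex> initial) {
        buffers_[0] = std::move(initial);
    }

    void runPass(float dt) {
        const std::vector<Vertex>& src = buffers_[current_];
        std::vector<Vertex>& dst = buffers_[1 - current_];
        dst.clear();  // overwrite last pass's leftovers
        for (const Vertex& v : src) geometryPass(v, dt, dst);
        current_ = 1 - current_;  // this pass's output is the next pass's input
    }

    const std::vector<Vertex>& current() const { return buffers_[current_]; }

private:
    std::array<std::vector<Vertex>, 2> buffers_;
    std::size_t current_ = 0;
};
```

In a real GPU implementation the same swap would happen between two vertex buffers, one used as the draw input and the other as the capture target, so the data does not need to travel back to the CPU between passes.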

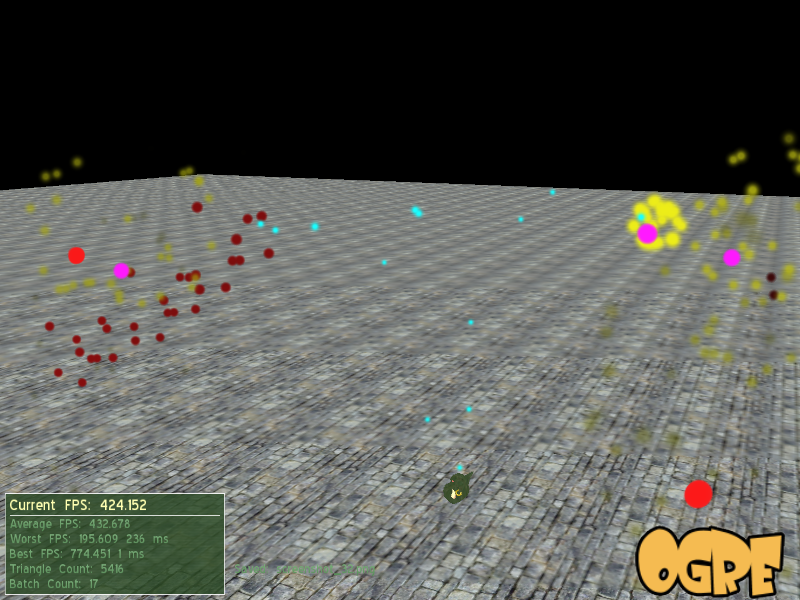

One application of this feature is GPU-only particle systems, like the one Microsoft built in their ParticleGS demo. So I decided to add the API to Ogre, implement it in the OpenGL render system, and port the ParticleGS demo to Ogre.

And now for the results:

Note that all of the geometry is generated entirely in shaders! I don't use Ogre::ManualObject, nor do I write vertex or index buffers by hand. The only geometry I create myself is the single launcher particle that starts the system.
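The launcher idea can be sketched in the same spirit. The code below is a simplified, CPU-side illustration of how a geometry shader pass can grow and shrink a particle system when its output is fed back as the next pass's input: a launcher particle re-emits itself and periodically fires a shell, shells burst into embers, and expired embers are simply not emitted. The particle types are loosely modeled on the ParticleGS launcher/shell/ember scheme, but the names (`Type`, `Particle`, `updateParticle`, `step`), timings and counts are hypothetical, and this is not the demo's shader code.

```cpp
#include <cassert>
#include <vector>

// Hypothetical particle types, loosely modeled on ParticleGS.
enum class Type { Launcher, Shell, Ember };

struct Particle {
    Type type;
    float age;  // seconds since this particle was emitted
};

// Made-up constants for the sketch.
constexpr float kLaunchInterval = 1.0f;  // launcher fires a shell this often
constexpr float kShellLifetime = 2.0f;   // a shell bursts after this long
constexpr float kEmberLifetime = 1.0f;   // embers vanish after this long
constexpr int kEmbersPerShell = 4;       // embers emitted per burst

// CPU stand-in for one geometry shader invocation: reads one particle and
// appends zero or more particles to the output stream.
void updateParticle(const Particle& p, float dt, std::vector<Particle>& out) {
    assert(dt > 0.0f);
    const float age = p.age + dt;
    switch (p.type) {
    case Type::Launcher:
        if (age >= kLaunchInterval) {
            out.push_back({Type::Shell, 0.0f});     // fire a new shell
            out.push_back({Type::Launcher, 0.0f});  // restart the launch timer
        } else {
            out.push_back({Type::Launcher, age});   // keep waiting
        }
        break;
    case Type::Shell:
        if (age >= kShellLifetime) {
            // Burst: the shell is not re-emitted, embers take its place.
            for (int i = 0; i < kEmbersPerShell; ++i)
                out.push_back({Type::Ember, 0.0f});
        } else {
            out.push_back({Type::Shell, age});
        }
        break;
    case Type::Ember:
        if (age < kEmberLifetime) out.push_back({Type::Ember, age});
        break;  // an expired ember is dropped by not emitting it
    }
}

// One simulation step: the output of this pass is the input of the next.
std::vector<Particle> step(const std::vector<Particle>& in, float dt) {
    std::vector<Particle> out;
    for (const Particle& p : in) updateParticle(p, dt, out);
    return out;
}
```

Starting from a single launcher, six steps of 0.5 s leave one launcher, two shells and four embers. The only particle created up front is the launcher; everything else is produced by the update pass itself.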

Particle GS Demo download

There is a binary download for those who want to try it out for themselves. You can download it here.

Demo requirements: a GeForce 8-class (or newer) graphics card. ATI has not implemented geometry shaders in their OpenGL drivers.

For those of you who aren't privileged enough to have a compatible card, you can also check out the video of the demo on YouTube, although my video conversion skills don't really do it justice.

If you want more in-depth information about the project, check out the discussion thread, or even grab the source code from the SVN branch:

https://svn.ogre3d.org/svnroot/ogre/bra ... eomshaders

This pretty much marks the end of my Google Summer of Code project, which I can now say has been a huge success for me.

Let the fireworks begin!